Status: concluded

Period: November 2016 - January 2021

Funding: 99.500 €

Funding organization: Italian Ministry of Education - University and Research; Comitato ICT

Person(s) in charge:

Knowledge Graphs (KGs) have emerged as a core abstraction for incorporating human knowledge into intelligent systems. This knowledge is encoded in a graph-based structure whose nodes represent real-world entities, while edges define multiple relations between these entities. KGs are gaining attention from both industry and academia because they provide a flexible way to capture, organize, and query large amounts of multi-relational data.
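The node-and-edge structure described above can be illustrated with a minimal sketch. It stores a KG as a list of (subject, relation, object) triples and answers a simple query. The entity and relation names, as well as the `build_index` and `query` helpers, are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical triples (subject, relation, object); all names are illustrative.
triples = [
    ("aspirin", "treats", "headache"),
    ("aspirin", "type", "Drug"),
    ("ibuprofen", "treats", "headache"),
    ("ibuprofen", "treats", "fever"),
]

def build_index(triples):
    """Index outgoing edges by subject: subject -> [(relation, object), ...]."""
    index = defaultdict(list)
    for subject, relation, obj in triples:
        index[subject].append((relation, obj))
    return index

def query(index, subject, relation):
    """Return every object linked to `subject` through `relation`."""
    return [obj for rel, obj in index.get(subject, []) if rel == relation]

kg = build_index(triples)
print(query(kg, "ibuprofen", "treats"))
```

Production KGs are usually stored in a triple store or graph database and queried with a language such as SPARQL; the in-memory index here only mirrors the underlying data model.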

Deep Learning on Graphs, also known as Graph Representation Learning (GRL), is the standard toolbox for learning from graph data. GRL techniques can drive improvements on high-impact problems in different fields, such as content recommendation or drug discovery. Unlike other types of data such as images, graph data requires specific learning methods. As Michael Bronstein (Imperial College London) puts it, "these methods are based on some form of message passing on the graph allowing different nodes to exchange information".
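To make the idea of message passing concrete, the following is a minimal sketch of a single round in which each node averages its own features with those of its neighbors. It is a simplification under stated assumptions: the graph is undirected, and there are no learned weights or non-linearities, which real GRL models do include. The function name and toy graph are hypothetical.

```python
def message_passing_step(features, edges):
    """One round of mean-aggregation message passing on an undirected graph.

    features: dict mapping node -> list of floats (the node's current vector)
    edges: list of (u, v) pairs
    """
    neighbors = {node: [] for node in features}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)

    updated = {}
    for node, own in features.items():
        # Messages are the neighbors' current vectors plus the node's own.
        messages = [features[n] for n in neighbors[node]] + [own]
        updated[node] = [
            sum(m[i] for m in messages) / len(messages)
            for i in range(len(own))
        ]
    return updated

# Toy path graph a - b - c with 2-dimensional features (illustrative values).
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.0, 0.0]}
edges = [("a", "b"), ("b", "c")]
new_features = message_passing_step(features, edges)
print(new_features)
```

Stacking several such rounds lets information travel further: after two steps, node `c` is influenced by node `a` even though they are not directly connected.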

Structured data sources play a fundamental role in the data ecosystem because much of the valuable (and reusable) information within organizations and the Web is available as structured data. Publishing these data into KGs is a complex process because it requires extracting and integrating information from heterogeneous sources.

The goal of integrating these sources is to harmonize their data and provide a coherent view of the overall information. Heterogeneous sources range from unstructured data, such as plain text, to structured data, including tabular formats such as CSV files and relational databases, and tree-structured formats such as JSON and XML. The integration of structured data is enabled by mappings that describe the relationships between the global schema of an ontology (the semantic skeleton of a KG) and the local schema of the target data source.

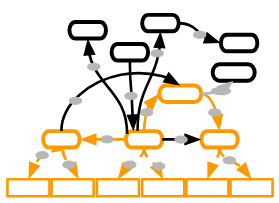

The result of the mapping can be seen as a graph, known as a semantic model, which expresses the links between the local schema, represented by the attributes of the target data source, and the global schema, represented by the reference ontologies. A semantic model is a powerful tool for representing the mapping for two main reasons. First, it frames the relations between ontology classes as paths in the graph. Second, it makes it possible to run graph algorithms to detect the correct mapping.
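The view of relations as paths can be sketched with a small example. Assuming each source attribute has already been annotated with an ontology class, a breadth-first search over the ontology graph returns the shortest chain of relations connecting two classes. The ontology fragment, the attribute names, and the `find_relation_path` helper are all hypothetical; this illustrates the graph framing, not the project's actual algorithm.

```python
from collections import deque

# Hypothetical ontology fragment: (source class, relation, target class).
ontology_edges = [
    ("Contract", "hasSupplier", "Organization"),
    ("Organization", "hasAddress", "Address"),
    ("Contract", "hasAmount", "Price"),
]

# Hypothetical annotations: local attribute -> ontology class.
attribute_to_class = {"contract_id": "Contract", "supplier_city": "Address"}

def find_relation_path(edges, start, goal):
    """Breadth-first search for the shortest directed path of relations."""
    adjacency = {}
    for src, rel, dst in edges:
        adjacency.setdefault(src, []).append((rel, dst))

    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for rel, nxt in adjacency.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None  # the two classes are not connected in this direction

path = find_relation_path(
    ontology_edges,
    attribute_to_class["contract_id"],
    attribute_to_class["supplier_city"],
)
print(path)
```

When several paths connect the same pair of classes, a shortest-path heuristic alone cannot tell which one carries the intended meaning, which is why a method for ranking candidate relations is needed.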

The research activity of this project concerns the automatic publishing of data from structured data sources into KGs through a mapping approach built on semantic models.

The main goal of this research project is to automate the process of creating semantic models. This goal is pursued through a novel approach based on Graph Neural Networks (GNNs) that automatically identifies the relations connecting already-annotated data attributes. GNNs are a subset of GRL techniques that specialize in neighborhood aggregation to encode representations of graph nodes and edges.

In this approach, GNNs are trained on Linked Data (LD) graphs that contain semantic information and act as background knowledge to reconstruct the semantics of data sources: the intuition is that relations used by other people to semantically describe data in a domain are more likely to express the semantics of the target source in the same domain.
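The intuition above can be illustrated with a deliberately simple stand-in. The sketch below does not use a GNN: it ranks candidate relations between two classes by how often they appear in background triples, a plain frequency baseline swapped in for clarity. The class-level triples and the `rank_relations` helper are hypothetical.

```python
from collections import Counter

# Hypothetical background knowledge, already lifted to class level:
# (subject class, relation, object class).
background = [
    ("Person", "birthPlace", "City"),
    ("Person", "birthPlace", "City"),
    ("Person", "livesIn", "City"),
    ("Organization", "locatedIn", "City"),
]

def rank_relations(background, subject_class, object_class):
    """Rank relations seen between two classes by relative frequency."""
    counts = Counter(
        rel
        for subj, rel, obj in background
        if subj == subject_class and obj == object_class
    )
    total = sum(counts.values())
    # most_common() is empty when nothing matches, so no division by zero.
    return [(rel, n / total) for rel, n in counts.most_common()]

ranking = rank_relations(background, "Person", "City")
print(ranking)
```

A trained GNN replaces these raw counts with learned representations of nodes and relations, so it can also score relations for entity pairs that never co-occur in the background graph.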

The main achievements of this research project are the following:

(i) A PhD Thesis entitled: "Neural Networks for Building Semantic Models and Knowledge Graphs".

(ii) A journal publication: Futia, G., Vetrò, A., & De Martin, J. C. (2020). SeMi: A SEmantic Modeling machIne to build Knowledge Graphs with graph neural networks. SoftwareX, 12, 100516.

(iii) A journal publication: Futia, G., & Vetrò, A. (2020). On the integration of knowledge graphs into deep learning models for a more comprehensible AI—Three Challenges for Future Research. Information, 11(2), 122.

(iv) A workshop paper: Futia, G., Garifo, G., Vetrò, A., & De Martin, J. C. Modeling the semantics of data sources with graph neural networks. Bridge Between Perception and Reasoning: Graph Neural Networks & Beyond, ICML 2020 Workshop.

(v) A conference publication: “Training Neural Language Models with SPARQL queries for Semi-Automatic Semantic Mapping” (G. Futia, A. Vetrò, A. Melandri, JC. De Martin), Procedia Computer Science 137, 187-198, describing the use of a neural language model, Word2Vec, for semi-automatic semantic type detection.

(vi) A technical report: “Linked Data Validity”, carried out by researchers and students who participated in the International Semantic Web Research School (ISWS) 2018.

(vii) A conference publication: “Removing barriers to transparency: A case study on the use of semantic technologies to tackle procurement data inconsistency” (G. Futia, A. Melandri, A. Vetrò, F. Morando, JC. De Martin) - European Semantic Web Conference, 623-637.

(viii) A workshop publication: “ContrattiPubblici.org, a Semantic Knowledge Graph on Public Procurement Information” (G. Futia, F. Morando, A. Melandri, L. Canova, F. Ruggiero) - AI Approaches to the Complexity of Legal Systems, 380-393.